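- Filebeat overview and deployment
  - About Filebeat
  - Memory usage: Filebeat vs. Logstash
  - Deploying Filebeat
- Filebeat: collecting one log type to a local file
  - Configure Filebeat
  - Check the local output file
- Filebeat: collecting multiple logs of one type to Logstash
  - Configure Filebeat
  - Configure Logstash to output to ES
  - Verify the data
- Filebeat: collecting multiple logs of one type to Redis
  - Configure Filebeat
  - Log in to Redis and verify the data
- Filebeat: collecting multiple log types to Redis
  - Log in to Redis and verify the data
  - Use Logstash to ship the Beats data in Redis to ES
  - Verify the data
- Filebeat: collecting multiple log types to multiple outputs
  - Configure Filebeat
  - Verify the Redis data and the local file data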

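Filebeat overview and deployment

About Filebeat

Filebeat ships with prebuilt modules. These modules contain the configuration needed to collect, parse, enrich, and visualize data from a range of log file formats. Each Filebeat module consists of one or more filesets, and each fileset contains ingest node pipelines, Elasticsearch templates, Filebeat prospector configurations, and Kibana dashboards.

The modules are a good way to get started. Filebeat itself is a lightweight, single-purpose log shipper, intended for collecting logs on servers that do not have Java installed. It can forward logs to Logstash, Elasticsearch, Redis, and similar destinations for further processing.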

Memory usage: Filebeat vs. Logstash
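To repeat this comparison on another host, look at VmRSS (memory actually resident in RAM) rather than VmSize. VmSize counts the whole virtual address space, including memory that is reserved but never touched. The following is a minimal Python sketch, assuming a Linux /proc filesystem. The script name rss.py is hypothetical. It sums VmRSS over the PIDs passed on the command line, for example both Filebeat processes.

```python
import sys


def parse_status(text):
    """Return the Vm* fields of a /proc/<pid>/status dump as {name: kB}."""
    fields = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep and name.startswith("Vm"):
            # Values look like "  661168 kB"; keep only the number.
            fields[name] = int(value.split()[0])
    return fields


def rss_kb(pid):
    """Read the resident set size (VmRSS, in kB) of one process on Linux."""
    with open(f"/proc/{pid}/status") as f:
        return parse_status(f.read()).get("VmRSS", 0)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 rss.py <pid> [<pid> ...]; prints each RSS and the total.
    total = 0
    for pid in sys.argv[1:]:
        kb = rss_kb(pid)
        total += kb
        print(f"{pid}: {kb} kB")
    print(f"total: {total} kB ({total / 1024:.1f} MB)")
```

For example, `python3 rss.py $(pgrep -f logstash)` reports the Logstash total. Summing across PIDs matters for Filebeat, which runs as a wrapper process plus the shipper itself.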

Logstash memory usage

```
[root@linux-node1 ~]# ps -ef | grep -v grep | grep logstash | awk '{print $2}'
12628
[root@linux-node1 ~]# cat /proc/12628/status | grep -i vm
VmPeak: 6252788 kB
VmSize: 6189252 kB
VmLck: 0 kB
VmHWM: 661168 kB
VmRSS: 661168 kB
VmData: 6027136 kB
VmStk: 88 kB
VmExe: 4 kB
VmLib: 16648 kB
VmPTE: 1888 kB
VmSwap: 0 kB
```

Filebeat memory usage

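```
[root@linux-node1 ~]# cat /proc/12750/status /proc/12751/status | grep -i vm
VmPeak: 11388 kB
VmSize: 11388 kB
VmLck: 0 kB
VmHWM: 232 kB
VmRSS: 232 kB
VmData: 10424 kB
VmStk: 88 kB
VmExe: 864 kB
VmLib: 0 kB
VmPTE: 16 kB
VmSwap: 0 kB
VmPeak: 25124 kB
VmSize: 25124 kB
VmLck: 0 kB
VmHWM: 15144 kB
VmRSS: 15144 kB
VmData: 15496 kB
VmStk: 88 kB
VmExe: 4796 kB
VmLib: 0 kB
VmPTE: 68 kB
VmSwap: 0 kB
```

Logstash runs on the JVM and keeps about 646 MB resident (VmRSS 661168 kB). The two Filebeat processes together use about 15 MB (232 kB + 15144 kB). These are presumably the filebeat-god supervisor and filebeat itself.

Deploying Filebeat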

Official documentation: https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-configuration-details.html

Download page: https://www.elastic.co/downloads/beats/filebeat

```
# Download the Filebeat package
[root@linux-node5 ~]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.3.2-x86_64.rpm
# Install Filebeat
[root@linux-node5 ~]# yum localinstall -y filebeat-5.3.2-x86_64.rpm
```

Filebeat: collecting one log type to a local file

Configure Filebeat

```
# Edit the Filebeat configuration file
[root@linux-node5 ~]# vim /etc/filebeat/filebeat.yml
```

```yaml
filebeat.prospectors:
- input_type: log
  paths:
    - /usr/local/nginx/logs/access_json.log
  # Lines not to collect (lines starting with DBG, and empty lines)
  exclude_lines: ["^DBG","^$"]
  # Log type (written to the "type" field of each event)
  document_type: ngx_log

output.file:
  path: "/tmp"
  filename: "zls_filebeat.txt"
```

```
# Start Filebeat (CentOS 6)
[root@linux-node5 ~]# /etc/init.d/filebeat start
# Start Filebeat (CentOS 7)
[root@linux-node5 ~]# systemctl start filebeat
```

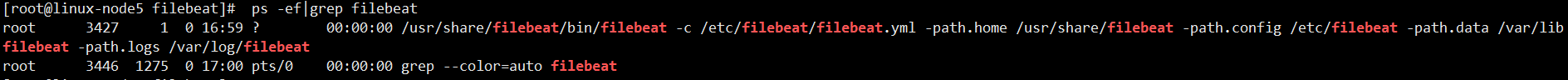

```
# Check the processes
[root@linux-node5 ~]# ps -ef|grep filebeat
root 10881 1 0 01:06 pts/1 00:00:00 /usr/share/filebeat/bin/filebeat-god -r / -n -p /var/run/filebeat.pid -- /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat
root 10882 10881 0 01:06 pts/1 00:00:00 /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat
```
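The exclude_lines setting in the configuration above is a list of regular expressions, and a line is dropped if any of them matches. You can check the patterns before deploying by replaying a few sample lines locally. This Python sketch assumes that Python's re.search behaves like the Go regexp matching Filebeat uses for simple anchored patterns such as ^DBG and ^$. The sample lines are made up.

```python
import re

# Patterns copied from the exclude_lines setting in filebeat.yml.
EXCLUDE_LINES = [r"^DBG", r"^$"]


def keep_line(line, patterns=EXCLUDE_LINES):
    """Return False if any exclude pattern matches anywhere in the line."""
    return not any(re.search(p, line) for p in patterns)


# Hypothetical sample lines: two access-log entries, a debug line, a blank line.
lines = [
    '{"status":"200","url":"/index.html"}',
    "DBG cache miss",
    "",
    '{"status":"304","url":"/index.html"}',
]
kept = [line for line in lines if keep_line(line)]
print(kept)
```

Only the two JSON lines survive. The debug line and the empty line are filtered out before anything is shipped.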

Check the local output file

```
# List the contents of /tmp
[root@linux-node5 ~]# ll /tmp/
-rw------- 1 root root 4513 Aug 2 17:20 zls_filebeat.txt
# View the log contents
[root@linux-node5 ~]# cat /tmp/zls_filebeat.txt
{"@timestamp":"2019-08-02T09:20:22.599Z","beat":{"hostname":"linux-node5.98yz.cn","name":"linux-node5.98yz.cn","version":"5.3.3"},"input_type":"log","message":"{\"@timestamp\":\"2019-08-02T17:17:30+08:00\",\"host\":\"192.168.6.244\",\"clientip\":\"192.168.3.46\",\"size\":3700,\"responsetime\":0.000,\"upstreamtime\":\"-\",\"upstreamhost\":\"-\",\"http_host\":\"192.168.6.244\",\"url\":\"/index.html\",\"domain\":\"192.168.6.244\",\"xff\":\"-\",\"referer\":\"-\",\"status\":\"200\"}","offset":275,"source":"/var/log/nginx/access_json.log","type":"ngx_log"}
{"@timestamp":"2019-08-02T09:20:22.599Z","beat":{"hostname":"linux-node5.98yz.cn","name":"linux-node5.98yz.cn","version":"5.3.3"},"input_type":"log","message":"{\"@timestamp\":\"2019-08-02T17:17:30+08:00\",\"host\":\"192.168.6.244\",\"clientip\":\"192.168.3.46\",\"size\":368,\"responsetime\":0.000,\"upstreamtime\":\"-\",\"upstreamhost\":\"-\",\"http_host\":\"192.168.6.244\",\"url\":\"/nginx-logo.png\",\"domain\":\"192.168.6.244\",\"xff\":\"-\",\"referer\":\"http://192.168.6.244/\",\"status\":\"200\"}","offset":573,"source":"/var/log/nginx/access_json.log","type":"ngx_log"}
```

Filebeat: collecting multiple logs of one type to Logstash

Configure Filebeat

```
# Edit the Filebeat configuration file
[root@linux-node5 ~]# vim /etc/filebeat/filebeat.yml
```

```yaml
filebeat.prospectors:
- input_type: log
  paths:
    - /usr/local/nginx/logs/access_json.log
    - /usr/local/nginx/logs/access.log
  exclude_lines: ["^DBG","^$"]
  document_type: ngx_zls

output.logstash:
  # Logstash server addresses; more than one may be listed
  hosts: ["192.168.6.241:6666"]
  # Whether output to Logstash is enabled (the default is already true)
  enabled: true
  # Number of worker threads
  worker: 1
  # Compression level
  compression_level: 3
  # Load-balance events across the hosts when more than one is listed
  # loadbalance: true
```
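Before restarting Filebeat, it can be worth checking that the Logstash Beats port (6666 in this setup) is reachable from the Filebeat host. Below is a minimal Python sketch. The name check_port.py is hypothetical. A successful connection only shows that something is listening on that port, not that it is the Beats input.

```python
import socket
import sys


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 check_port.py 192.168.6.241 6666
    host, port = sys.argv[1], int(sys.argv[2])
    print(f"{host}:{port} reachable: {port_open(host, port)}")
```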

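```
# Restart Filebeat
[root@linux-node5 ~]# /etc/init.d/filebeat stop
Stopping filebeat: [  OK  ]
[root@linux-node5 ~]# rm -f /var/lib/filebeat/registry
[root@linux-node5 ~]# /etc/init.d/filebeat start
```

Deleting /var/lib/filebeat/registry makes Filebeat forget how far it has read each file. After the restart it therefore ships the monitored logs again from the beginning. That is convenient for testing a new output, but in production it produces duplicate events.

Configure Logstash to output to ES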

```
# Go to the Logstash configuration directory
[root@linux-node1 ~]# cd /etc/logstash/conf.d/
# Edit the Logstash configuration file
[root@linux-node1 conf.d]# vim beats.conf
```

```
input {
  beats {
    port => 6666
    codec => "json"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.6.241:9200"]
    index => "%{type}-%{+YYYY.MM.dd}"
  }
}
```
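The index setting %{type}-%{+YYYY.MM.dd} builds the index name from two parts: the event's type field (set by document_type in Filebeat) and the date of its @timestamp. Logstash formats that date in UTC. In a UTC+8 time zone, events logged between 00:00 and 08:00 local time therefore land in the previous day's index. The Python sketch below only mimics the naming rule for illustration; it is not Logstash code.

```python
from datetime import datetime, timezone


def index_name(event):
    """Mimic the Logstash index setting "%{type}-%{+YYYY.MM.dd}".

    The date comes from @timestamp, interpreted and formatted in UTC.
    """
    ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    return f"{event['type']}-{ts:%Y.%m.%d}"


print(index_name({"@timestamp": "2019-08-02T09:20:22.599Z", "type": "ngx_log"}))
# ngx_log-2019.08.02

# 00:30 on Aug 3 in UTC+8 is still Aug 2 in UTC, so it goes to the Aug 2 index.
print(index_name({"@timestamp": "2019-08-02T16:30:00.000Z", "type": "tc_log"}))
# tc_log-2019.08.02
```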

```
# Start Logstash
[root@linux-node1 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/beats.conf &
```

Verify the data

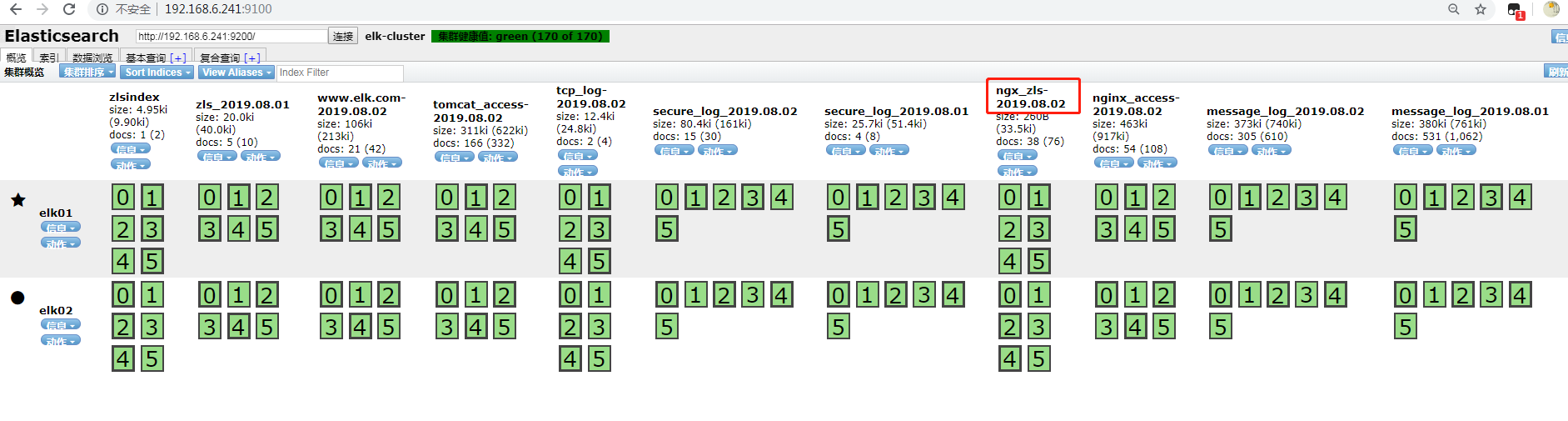

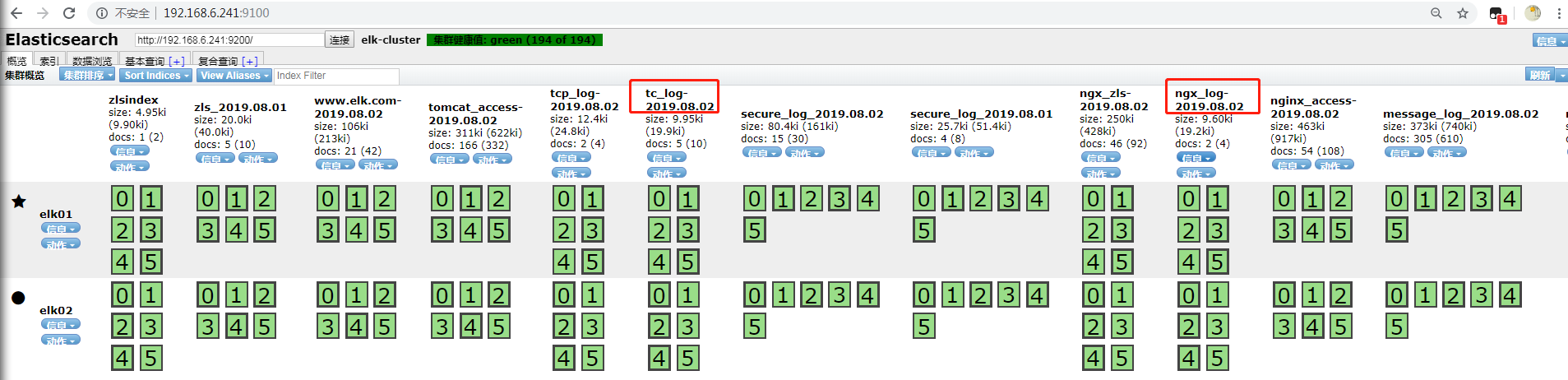

Open a browser and visit http://192.168.6.241:9100/ to check the new index.

Filebeat: collecting multiple logs of one type to Redis

Configure Filebeat

```
# Edit the Filebeat configuration file
[root@linux-node5 ~]# vim /etc/filebeat/filebeat.yml
```

```yaml
filebeat.prospectors:
- input_type: log
  paths:
    - /usr/local/nginx/logs/access_json.log
    - /usr/local/nginx/logs/access.log
  # Lines not to collect
  exclude_lines: ["^DBG","^$"]
  # Log type
  document_type: www.driverzeng.com

output.redis:
  hosts: ["192.168.6.243:6379"]
  # Name of the key in Redis
  key: "nginx"
  # Use database 2
  db: 2
  # Timeout (seconds)
  timeout: 5
  # Redis password
  password: zls
```
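If redis-cli is not available on the machine you are checking from, you can also read the list length over a raw socket, because Redis speaks a simple text protocol (RESP). Below is a minimal Python sketch, assuming password authentication with AUTH as configured above. The helper names encode_command, read_reply_line and list_length are hypothetical. Error handling is reduced to raising on -ERR replies.

```python
import socket


def encode_command(*args):
    """Encode a Redis command as a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = str(arg).encode()
        parts.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(parts)


def read_reply_line(f):
    """Read one single-line reply (+OK, :42 or -ERR ...) from a socket file.

    Only these reply kinds are handled, which is enough for AUTH, SELECT and LLEN.
    """
    line = f.readline().decode().rstrip("\r\n")
    if line.startswith("-"):
        raise RuntimeError(line[1:])
    return line


def list_length(host, port, password, db, key):
    """Authenticate, select the database and return LLEN of the given key."""
    with socket.create_connection((host, port), timeout=5) as sock, sock.makefile("rb") as f:
        reply = ""
        for command in (("AUTH", password), ("SELECT", db), ("LLEN", key)):
            sock.sendall(encode_command(*command))
            reply = read_reply_line(f)
        # LLEN answers with an integer reply such as ":218".
        return int(reply[1:])
```

With the values from this section, `list_length("192.168.6.243", 6379, "zls", 2, "nginx")` returns the same number as `LLEN nginx` in redis-cli after `SELECT 2`.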

```
# Restart Filebeat
[root@linux-node5 ~]# systemctl stop filebeat.service
[root@linux-node5 ~]# rm -f /var/lib/filebeat/registry
[root@linux-node5 ~]# systemctl start filebeat.service
```

Log in to Redis and verify the data

Note: redis-cli starts in database 0, while Filebeat writes to database 2 here. If KEYS * returns nothing, run SELECT 2 first. Run the client on the Redis host (192.168.6.243), or pass -h 192.168.6.243.

```
# Log in to Redis
[root@linux-node5 ~]# redis-cli -a zls
# List all keys
127.0.0.1:6379> KEYS *
1) "nginx"
# Check the length of the nginx list
127.0.0.1:6379> LLEN nginx
(integer) 218
# Pop one log entry (LPOP also removes it from the list)
127.0.0.1:6379> LPOP nginx
"{\"@timestamp\":\"2019-08-02T09:50:18.113Z\",\"beat\":{\"hostname\":\"linux-node5.98yz.cn\",\"name\":\"linux-node5.98yz.cn\",\"version\":\"5.3.3\"},\"input_type\":\"log\",\"message\":\"{\\\"@timestamp\\\":\\\"2019-08-02T17:41:52+08:00\\\",\\\"host\\\":\\\"192.168.6.244\\\",\\\"clientip\\\":\\\"192.168.3.46\\\",\\\"size\\\":0,\\\"responsetime\\\":0.000,\\\"upstreamtime\\\":\\\"-\\\",\\\"upstreamhost\\\":\\\"-\\\",\\\"http_host\\\":\\\"192.168.6.244\\\",\\\"url\\\":\\\"/index.html\\\",\\\"domain\\\":\\\"192.168.6.244\\\",\\\"xff\\\":\\\"-\\\",\\\"referer\\\":\\\"-\\\",\\\"status\\\":\\\"304\\\"}\",\"offset\":272,\"source\":\"/usr/local/nginx/logs/access_json.log\",\"type\":\"www.driverzeng.com\"}"
```

Filebeat: collecting multiple log types to Redis

```
# Modify the Filebeat configuration file
[root@linux-node5 conf.d]# vim /etc/filebeat/filebeat.yml
```

```yaml
filebeat.prospectors:
- input_type: log
  paths:
    - /usr/local/nginx/logs/access_json.log
  # Lines not to collect
  exclude_lines: ["^DBG","^$"]
  # Log type
  document_type: ngx_log

- input_type: log
  paths:
    - /usr/local/tomcat/logs/tomcat_access_log*.log
  # Lines not to collect
  exclude_lines: ["^DBG","^$"]
  # Log type
  document_type: tc_log

output.redis:
  hosts: ["192.168.6.243:6379"]
  # Name of the key in Redis
  key: "tomcat_nginx"
  # Use database 3
  db: 3
  # Timeout (seconds)
  timeout: 5
  # Redis password
  password: zls
```
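The Tomcat prospector relies on a glob. Filebeat expands paths with Go's file globbing, in which * matches any run of characters within a file name and . is a literal dot. The Tomcat file names here look like tomcat_access_log2019-08-02.log (the source of the Tomcat event in the Redis check below), so a stray dot in the pattern is easy to miss. The following Python sketch uses fnmatch, assuming its behavior for these simple patterns matches Go's filepath.Match.

```python
from fnmatch import fnmatchcase

# File name taken from the "source" field of a Tomcat event in this article.
name = "tomcat_access_log2019-08-02.log"

for pattern in ("tomcat_access_log*.log", "tomcat_access_log.*.log"):
    # fnmatchcase is case-sensitive, like globbing on Linux.
    print(pattern, "->", fnmatchcase(name, pattern))
```

The first pattern matches. The second does not, because it requires a dot right after "tomcat_access_log".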

```
# Restart Filebeat
[root@linux-node5 ~]# systemctl stop filebeat.service
[root@linux-node5 ~]# rm -f /var/lib/filebeat/registry
[root@linux-node5 ~]# systemctl start filebeat.service
```

Log in to Redis and verify the data

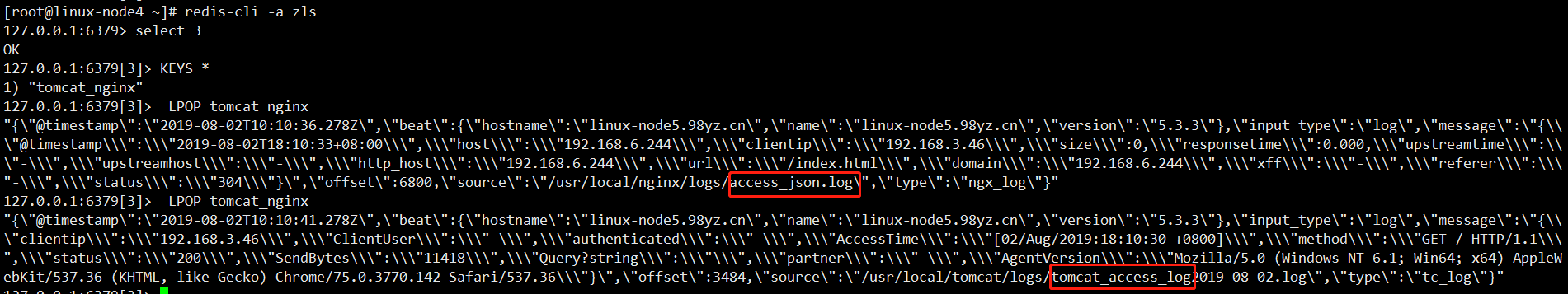

```
[root@linux-node4 ~]# redis-cli -a zls
127.0.0.1:6379> select 3
OK
127.0.0.1:6379[3]> KEYS *
1) "tomcat_nginx"
127.0.0.1:6379[3]> LPOP tomcat_nginx
"{\"@timestamp\":\"2019-08-02T10:10:36.278Z\",\"beat\":{\"hostname\":\"linux-node5.98yz.cn\",\"name\":\"linux-node5.98yz.cn\",\"version\":\"5.3.3\"},\"input_type\":\"log\",\"message\":\"{\\\"@timestamp\\\":\\\"2019-08-02T18:10:33+08:00\\\",\\\"host\\\":\\\"192.168.6.244\\\",\\\"clientip\\\":\\\"192.168.3.46\\\",\\\"size\\\":0,\\\"responsetime\\\":0.000,\\\"upstreamtime\\\":\\\"-\\\",\\\"upstreamhost\\\":\\\"-\\\",\\\"http_host\\\":\\\"192.168.6.244\\\",\\\"url\\\":\\\"/index.html\\\",\\\"domain\\\":\\\"192.168.6.244\\\",\\\"xff\\\":\\\"-\\\",\\\"referer\\\":\\\"-\\\",\\\"status\\\":\\\"304\\\"}\",\"offset\":6800,\"source\":\"/usr/local/nginx/logs/access_json.log\",\"type\":\"ngx_log\"}"
127.0.0.1:6379[3]> LPOP tomcat_nginx
"{\"@timestamp\":\"2019-08-02T10:10:41.278Z\",\"beat\":{\"hostname\":\"linux-node5.98yz.cn\",\"name\":\"linux-node5.98yz.cn\",\"version\":\"5.3.3\"},\"input_type\":\"log\",\"message\":\"{\\\"clientip\\\":\\\"192.168.3.46\\\",\\\"ClientUser\\\":\\\"-\\\",\\\"authenticated\\\":\\\"-\\\",\\\"AccessTime\\\":\\\"[02/Aug/2019:18:10:30 +0800]\\\",\\\"method\\\":\\\"GET / HTTP/1.1\\\",\\\"status\\\":\\\"200\\\",\\\"SendBytes\\\":\\\"11418\\\",\\\"Query?string\\\":\\\"\\\",\\\"partner\\\":\\\"-\\\",\\\"AgentVersion\\\":\\\"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36\\\"}\",\"offset\":3484,\"source\":\"/usr/local/tomcat/logs/tomcat_access_log2019-08-02.log\",\"type\":\"tc_log\"}"
```

As you can see, the Tomcat logs and the Nginx logs end up in the same key.

You might ask: if everything is in one key, won't the logs be mixed together, and how do we view them separately? The next step solves this problem.

Use Logstash to ship the Beats data in Redis to ES

```
# Go to the Logstash configuration directory
[root@linux-node1 ~]# cd /etc/logstash/conf.d/
# Edit the Logstash configuration file
[root@linux-node1 conf.d]# vim beats_redis_es.conf
```

```
input {
  redis {
    host => "192.168.6.243"
    port => "6379"
    db => "3"
    key => "tomcat_nginx"
    data_type => "list"
    password => "zls"
    codec => "json"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.6.241:9200"]
    index => "%{type}-%{+YYYY.MM.dd}"
  }
}
```

```
# Start Logstash
[root@linux-node1 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/beats_redis_es.conf &
```

Verify the data

Open a browser and visit http://192.168.6.241:9100/

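As you can see, Logstash uses the type field to split the logs and writes each type to its own ES index. The logs were mixed together in Redis, but they end up separated in ES, so when you add them to Kibana they also appear as two separate logs.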
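The separation works because every event still carries its own type field, even while all events share one Redis list. The Python sketch below illustrates the same idea on two abbreviated events shaped like the ones popped from tomcat_nginx above. The "..." message stands in for the omitted fields. The sketch groups the events by the index name they would get. It is an illustration only, not how Logstash is implemented.

```python
import json
from collections import defaultdict

# Two abbreviated events from the shared list (most fields omitted).
raw_events = [
    '{"@timestamp":"2019-08-02T10:10:36.278Z","type":"ngx_log","message":"..."}',
    '{"@timestamp":"2019-08-02T10:10:41.278Z","type":"tc_log","message":"..."}',
]

by_index = defaultdict(list)
for raw in raw_events:
    event = json.loads(raw)
    # Same rule as index => "%{type}-%{+YYYY.MM.dd}"; @timestamp is already UTC ("Z").
    day = event["@timestamp"][:10].replace("-", ".")
    by_index[f"{event['type']}-{day}"].append(event)

for index, events in sorted(by_index.items()):
    print(index, len(events))
```

Each type ends up in its own index, here ngx_log-2019.08.02 and tc_log-2019.08.02, regardless of the order in which the events arrived in Redis.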

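Filebeat: collecting multiple log types to multiple outputs

Configure Filebeat

Here we send the Nginx logs and the Tomcat logs to Redis and to a local file at the same time. This works with the Filebeat 5.x used in this article. Starting with Filebeat 6.0, only one output can be enabled at a time.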

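```
[root@linux-node5 conf.d]# vim /etc/filebeat/filebeat.yml
```

```yaml
filebeat.prospectors:
- input_type: log
  paths:
    - /usr/local/nginx/logs/access_json.log
  # Lines not to collect
  exclude_lines: ["^DBG","^$"]
  # Log type
  document_type: ngx_log

- input_type: log
  paths:
    # "*" matches the date in file names such as tomcat_access_log2019-08-02.log
    - /usr/local/tomcat/logs/tomcat_access_log*.log
  # Lines not to collect
  exclude_lines: ["^DBG","^$"]
  # Log type
  document_type: tc_log

output.redis:
  # Redis server addresses; more than one may be listed
  hosts: ["192.168.6.243:6379"]
  key: "tn"
  db: 2
  timeout: 5
  password: zls

output.file:
  path: "/tmp"
  filename: "zls.txt"
  # Note: the three options below are meant for network outputs such as
  # Logstash; the file output does not use them.
  # Number of worker threads
  worker: 1
  # Compression level
  compression_level: 3
  # Load-balance across hosts when more than one is listed
  loadbalance: true
```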

```
# Restart Filebeat
[root@linux-node5 ~]# systemctl stop filebeat.service
[root@linux-node5 ~]# rm -f /var/lib/filebeat/registry
[root@linux-node5 ~]# systemctl start filebeat.service
```

Verify the Redis data and the local file data

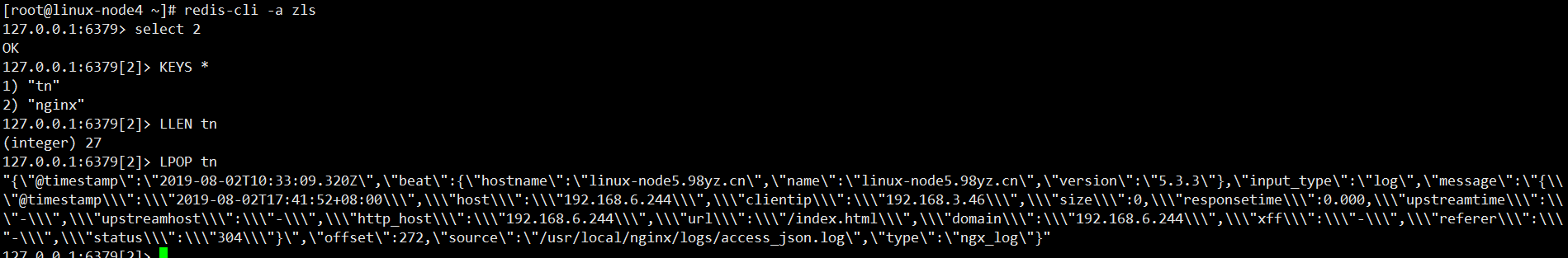

```
# Log in to Redis
[root@elkstack04 ~]# redis-cli -a zls
# Switch to database 2
127.0.0.1:6379> SELECT 2
OK
# List all keys
127.0.0.1:6379[2]> KEYS *
1) "tn"
# Check the list length
127.0.0.1:6379[2]> LLEN tn
(integer) 27
# Pop a log entry
127.0.0.1:6379[2]> LPOP tn
"{\"@timestamp\":\"2019-08-02T10:33:09.320Z\",\"beat\":{\"hostname\":\"linux-node5.98yz.cn\",\"name\":\"linux-node5.98yz.cn\",\"version\":\"5.3.3\"},\"input_type\":\"log\",\"message\":\"{\\\"@timestamp\\\":\\\"2019-08-02T17:41:52+08:00\\\",\\\"host\\\":\\\"192.168.6.244\\\",\\\"clientip\\\":\\\"192.168.3.46\\\",\\\"size\\\":0,\\\"responsetime\\\":0.000,\\\"upstreamtime\\\":\\\"-\\\",\\\"upstreamhost\\\":\\\"-\\\",\\\"http_host\\\":\\\"192.168.6.244\\\",\\\"url\\\":\\\"/index.html\\\",\\\"domain\\\":\\\"192.168.6.244\\\",\\\"xff\\\":\\\"-\\\",\\\"referer\\\":\\\"-\\\",\\\"status\\\":\\\"304\\\"}\",\"offset\":272,\"source\":\"/usr/local/nginx/logs/access_json.log\",\"type\":\"ngx_log\"}"
```

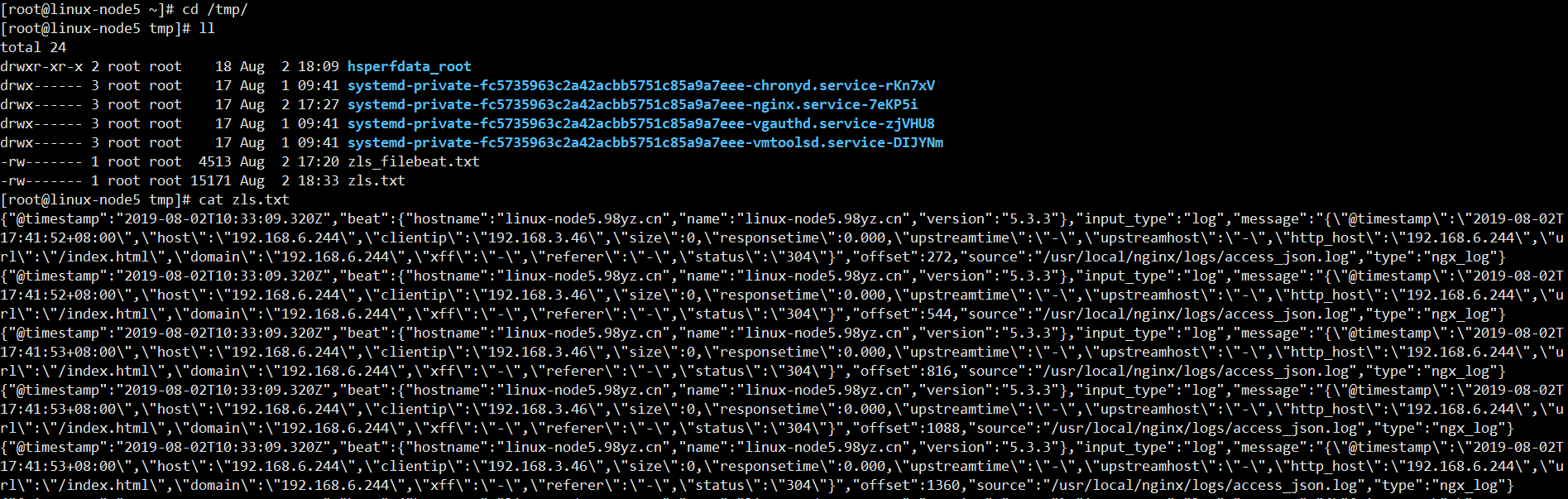

```
# Go to the /tmp directory
[root@linux-node5 ~]# cd /tmp/
# Check whether the file was created
[root@linux-node5 tmp]# ll
total 24
drwxr-xr-x 2 root root 18 Aug 2 18:09 hsperfdata_root
drwx------ 3 root root 17 Aug 1 09:41 systemd-private-fc5735963c2a42acbb5751c85a9a7eee-chronyd.service-rKn7xV
drwx------ 3 root root 17 Aug 2 17:27 systemd-private-fc5735963c2a42acbb5751c85a9a7eee-nginx.service-7eKP5i
drwx------ 3 root root 17 Aug 1 09:41 systemd-private-fc5735963c2a42acbb5751c85a9a7eee-vgauthd.service-zjVHU8
drwx------ 3 root root 17 Aug 1 09:41 systemd-private-fc5735963c2a42acbb5751c85a9a7eee-vmtoolsd.service-DIJYNm
-rw------- 1 root root 4513 Aug 2 17:20 zls_filebeat.txt
-rw------- 1 root root 15171 Aug 2 18:33 zls.txt
# View the file contents
[root@linux-node5 tmp]# cat zls.txt
{"@timestamp":"2019-08-02T10:33:09.320Z","beat":{"hostname":"linux-node5.98yz.cn","name":"linux-node5.98yz.cn","version":"5.3.3"},"input_type":"log","message":"{\"@timestamp\":\"2019-08-02T17:41:52+08:00\",\"host\":\"192.168.6.244\",\"clientip\":\"192.168.3.46\",\"size\":0,\"responsetime\":0.000,\"upstreamtime\":\"-\",\"upstreamhost\":\"-\",\"http_host\":\"192.168.6.244\",\"url\":\"/index.html\",\"domain\":\"192.168.6.244\",\"xff\":\"-\",\"referer\":\"-\",\"status\":\"304\"}","offset":272,"source":"/usr/local/nginx/logs/access_json.log","type":"ngx_log"}
{"@timestamp":"2019-08-02T10:33:09.320Z","beat":{"hostname":"linux-node5.98yz.cn","name":"linux-node5.98yz.cn","version":"5.3.3"},"input_type":"log","message":"{\"@timestamp\":\"2019-08-02T17:41:52+08:00\",\"host\":\"192.168.6.244\",\"clientip\":\"192.168.3.46\",\"size\":0,\"responsetime\":0.000,\"upstreamtime\":\"-\",\"upstreamhost\":\"-\",\"http_host\":\"192.168.6.244\",\"url\":\"/index.html\",\"domain\":\"192.168.6.244\",\"xff\":\"-\",\"referer\":\"-\",\"status\":\"304\"}","offset":544,"source":"/usr/local/nginx/logs/access_json.log","type":"ngx_log"}
{"@timestamp":"2019-08-02T10:33:09.320Z","beat":{"hostname":"linux-node5.98yz.cn","name":"linux-node5.98yz.cn","version":"5.3.3"},"input_type":"log","message":"{\"@timestamp\":\"2019-08-02T17:41:53+08:00\",\"host\":\"192.168.6.244\",\"clientip\":\"192.168.3.46\",\"size\":0,\"responsetime\":0.000,\"upstreamtime\":\"-\",\"upstreamhost\":\"-\",\"http_host\":\"192.168.6.244\",\"url\":\"/index.html\",\"domain\":\"192.168.6.244\",\"xff\":\"-\",\"referer\":\"-\",\"status\":\"304\"}","offset":816,"source":"/usr/local/nginx/logs/access_json.log","type":"ngx_log"}
```
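Each line of zls.txt is one Filebeat event in JSON. Its message field holds the original Nginx JSON line as an escaped string, so you need two rounds of decoding to reach fields such as status and url. The following is a minimal Python sketch, assuming one event per line as shown above. The script name decode_events.py is hypothetical. Lines whose message is not a JSON object, such as plain-text logs, are reported instead of raising an error.

```python
import json
import sys


def decode_line(line):
    """Parse one Filebeat event line and the JSON object nested in "message"."""
    event = json.loads(line)
    try:
        access = json.loads(event["message"])
    except (KeyError, ValueError):  # no message, or message is not JSON
        return event, None
    return event, access if isinstance(access, dict) else None


def summarize(path):
    """Print type, status and url for every event in a file such as /tmp/zls.txt."""
    with open(path) as f:
        for line in f:
            if not line.strip():
                continue
            event, access = decode_line(line)
            if access is None:
                print(event.get("type"), "(message is not a JSON object)")
            else:
                print(event.get("type"), access.get("status"), access.get("url"))


if __name__ == "__main__" and len(sys.argv) == 2:
    summarize(sys.argv[1])  # e.g. python3 decode_events.py /tmp/zls.txt
```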

Last updated: 2019-08-02 18:39  Author: 李延召